

Published Works


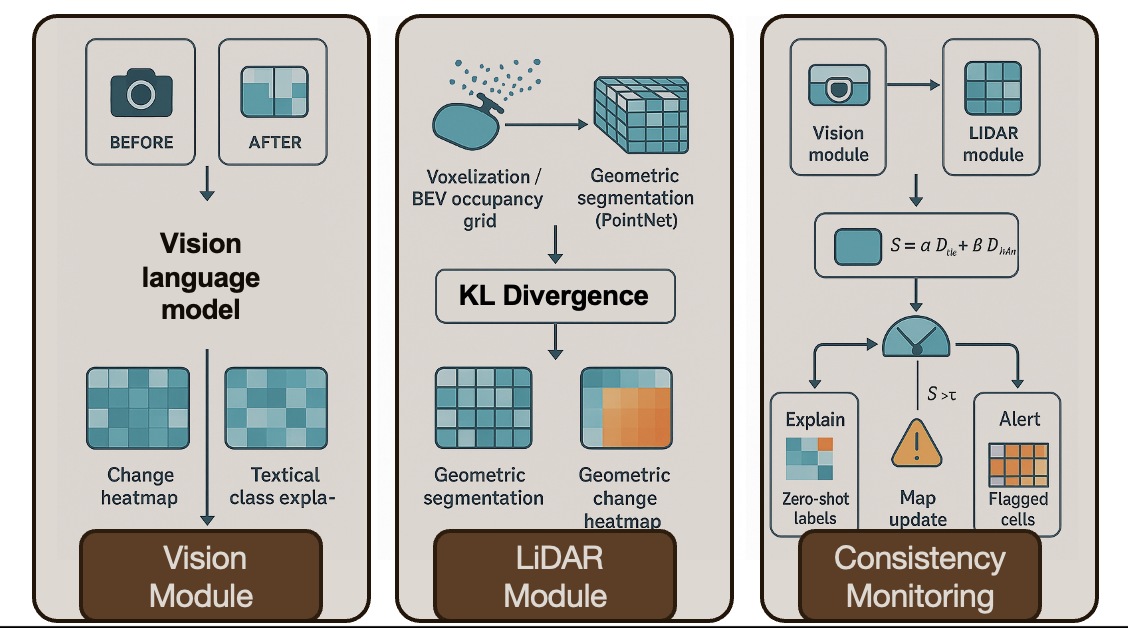

Huaze Liu*, Zihao Gao*, Adyasha Mohanty

Proceedings of the Institute of Navigation GNSS+ Conference (ION GNSS+ 2025)

[Paper] / [Slides] / [Video]

We designed an information-theoretic fusion framework that tracks consistency between camera and LiDAR maps using normalized KL divergence. By treating semantic drift as a shift in probability distributions, the system can detect when perception diverges from reality. This hybrid approach, which combines learned models with formal uncertainty constraints, proved resilient under degraded weather conditions where vision-only baselines typically collapse.
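As a rough illustration of the consistency check described above, the sketch below compares the class distributions that a camera map and a LiDAR map assign to the same cell. It is not the paper's implementation: the function names, the `1 - exp(-KL)` normalization, the smoothing constant, and the `0.5` threshold are all assumptions chosen only to make the idea concrete.

```python
import math

def kl_divergence(p, q, eps=1e-9):
    """KL(p || q) for two discrete distributions over the same classes."""
    # Smooth and renormalize so zero-probability classes never hit log(0).
    p = [x + eps for x in p]
    q = [x + eps for x in q]
    sp, sq = sum(p), sum(q)
    return sum((a / sp) * math.log((a / sp) / (b / sq)) for a, b in zip(p, q))

def normalized_kl(p, q):
    """Squash KL into [0, 1) with 1 - exp(-KL). The squashing is an assumption."""
    return 1.0 - math.exp(-kl_divergence(p, q))

def is_inconsistent(camera_probs, lidar_probs, threshold=0.5):
    """Flag a map cell whose camera and LiDAR semantics disagree (hypothetical rule)."""
    return normalized_kl(camera_probs, lidar_probs) > threshold

# Hypothetical per-cell class probabilities, e.g. [road, vehicle, vegetation].
agree = is_inconsistent([0.7, 0.2, 0.1], [0.7, 0.2, 0.1])
disagree = is_inconsistent([0.9, 0.05, 0.05], [0.05, 0.05, 0.9])
```

A bounded score like this makes a single threshold usable across cells, which is one plausible reason to normalize the divergence at all.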


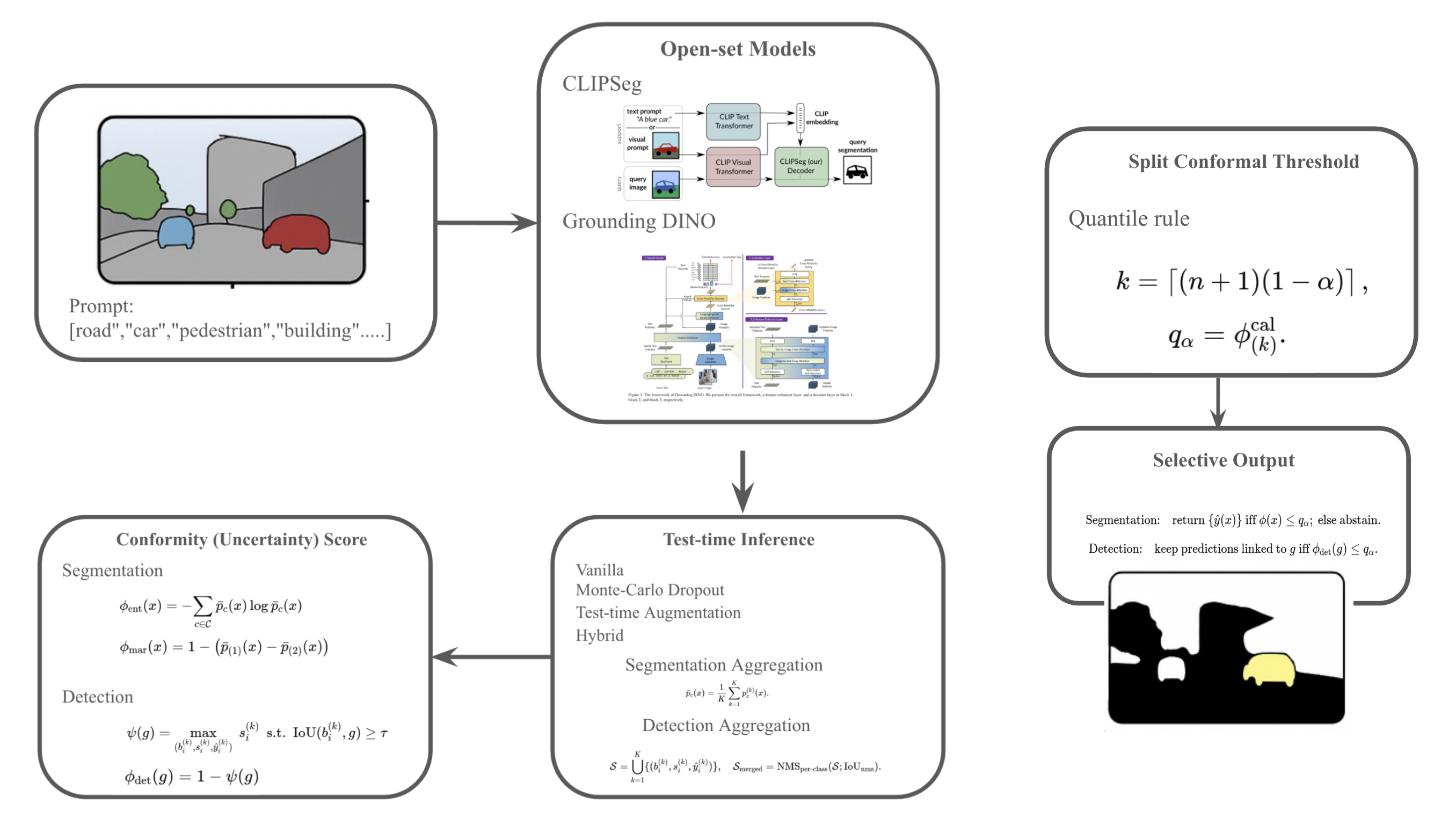

Huaze Liu, Adyasha Mohanty

Southern California AI and Robotics Symposium 2025

[Paper] / [Paper (Long version)]

To address the limitations of traditional uncertainty models in handling high-dimensional, non-Gaussian sensor noise, we applied Conformal Prediction (CP) to object detection and semantic segmentation. This method provides mathematically guaranteed bounds on prediction errors, making data-driven perception provably reliable rather than just heuristically confident.
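For readers unfamiliar with CP, here is a minimal sketch of standard split conformal prediction for a per-pixel (or per-box) class label. It is a textbook variant, not necessarily the one used in the paper; the function names and the `1 - p(true class)` score are assumptions. Its coverage guarantee is marginal and relies on calibration and test data being exchangeable.

```python
import math

def conformal_threshold(cal_scores, alpha=0.1):
    """Conformal quantile of calibration scores for target coverage 1 - alpha."""
    n = len(cal_scores)
    k = math.ceil((n + 1) * (1 - alpha))  # finite-sample corrected rank
    if k > n:
        return float("inf")  # too few calibration points: keep every class
    return sorted(cal_scores)[k - 1]

def prediction_set(class_probs, qhat):
    """All classes whose nonconformity score 1 - p is within the threshold."""
    return [c for c, p in enumerate(class_probs) if 1.0 - p <= qhat]

# Hypothetical calibration scores: 1 - (predicted probability of the true class).
cal_scores = [i / 100 for i in range(1, 20)]
qhat = conformal_threshold(cal_scores, alpha=0.1)
confident = prediction_set([0.9, 0.07, 0.03], qhat)
ambiguous = prediction_set([0.5, 0.45, 0.05], 0.6)
```

The size of the returned set acts as the uncertainty signal: a single class when the model is reliable, several classes when it is not.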


Ongoing Research Project


Huaze Liu and other cool collaborators

[Slides] (Under Preparation)

I addressed domain generalization challenges in humanoid catching by enforcing an observation asymmetry between the actor and the critic. While the critic retained privileged state access, the actor was trained on noisy, delayed observations to mimic real-world constraints. This information alignment allowed the Unitree G1 robot to learn robust strategies under physical control delays, achieving over 85% success in high-fidelity simulations.
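The observation asymmetry can be pictured with a small wrapper that hands the critic the true state while the actor sees an older, noise-corrupted copy. This is only an illustrative sketch: the class name, the fixed delay of two steps, and the Gaussian noise model are assumptions, not details of the project.

```python
import random
from collections import deque

class AsymmetricObservations:
    """Produce (actor_obs, critic_obs) pairs from the simulator's true state."""

    def __init__(self, delay_steps=2, noise_std=0.01, seed=0):
        # Holds the last delay_steps + 1 states; index 0 is the oldest.
        self.buffer = deque(maxlen=delay_steps + 1)
        self.noise_std = noise_std
        self.rng = random.Random(seed)

    def step(self, true_state):
        self.buffer.append(list(true_state))
        # Until the buffer fills, the actor sees the earliest available state.
        delayed = self.buffer[0]
        actor_obs = [x + self.rng.gauss(0.0, self.noise_std) for x in delayed]
        critic_obs = list(true_state)  # privileged: current, noise-free
        return actor_obs, critic_obs

# With noise disabled, the actor's view lags the critic's by two steps.
obs = AsymmetricObservations(delay_steps=2, noise_std=0.0)
for t in range(4):
    actor_obs, critic_obs = obs.step([float(t)])
```

Only the actor's input is degraded, so the policy that gets deployed never depends on information that the real robot cannot observe.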